Data handling for DSPM platforms

DSPM deployment options

As organizations evaluate Data Security Posture Management (DSPM), it’s important to understand the pros and cons of the three deployment methods below:

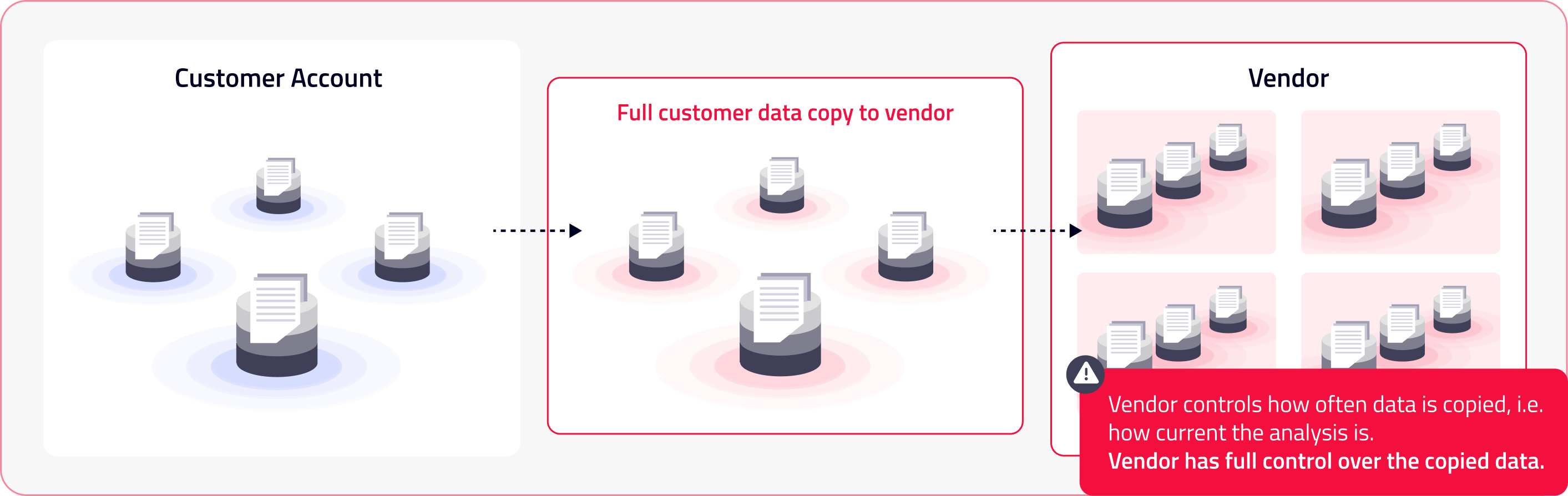

1. Extract and scan

Risky

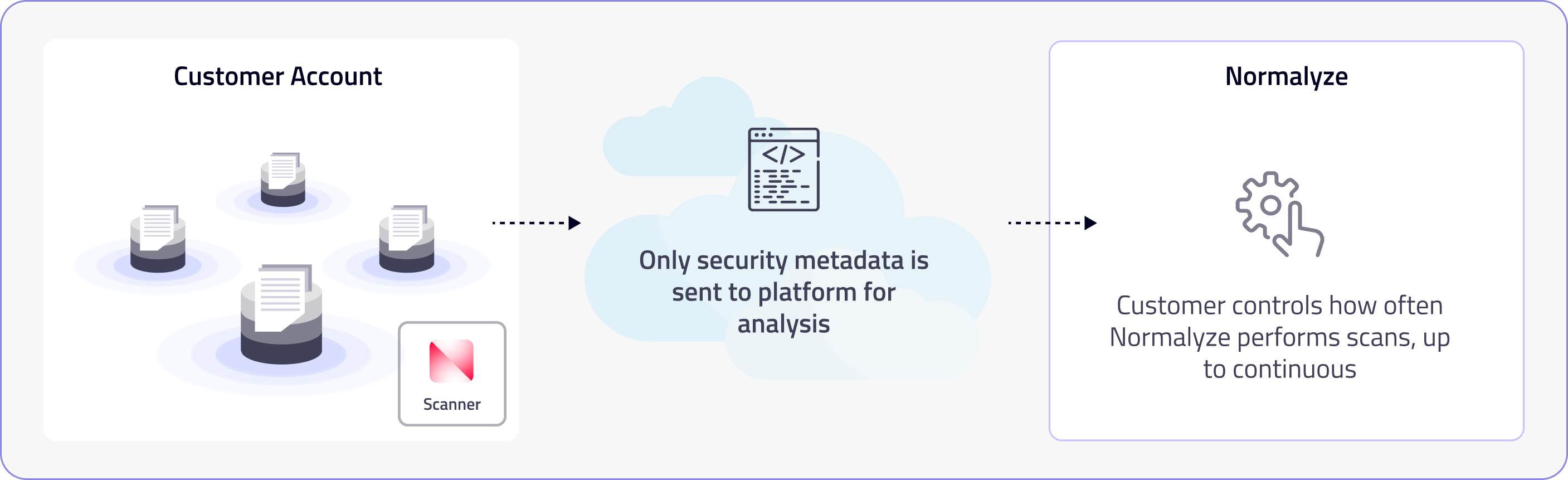

2. In-place scanning

Preferred

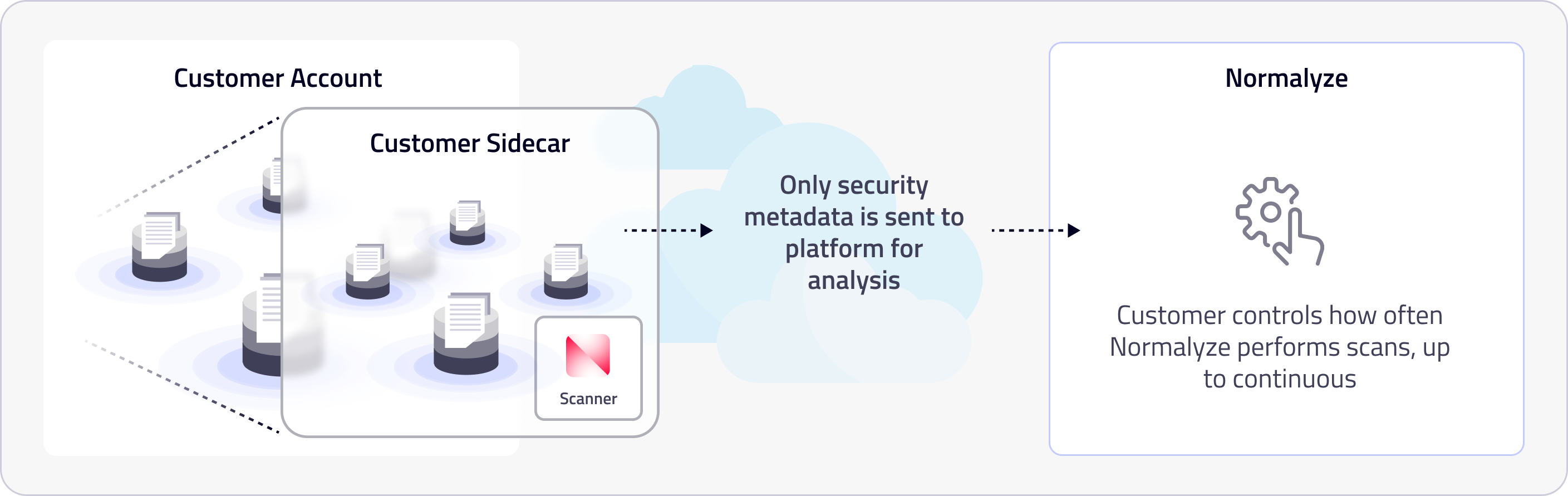

3. Sidecar

Alternative

1. Extract and scan (Risky)

What happens to the extracted data?

The customer loses control over the extracted data, including the assurance that it stays secure in transit and in the vendor environment, and that it is properly purged once analysis is complete. Visibility into data lineage is likely to be lost on extraction, and data sovereignty or other compliance issues may arise depending on where the vendor environment is located and how it is configured.

Who pays the processing costs?

In most cases, the cost of scanning is passed along to the customer, even though the data is processed in the vendor environment. Although the initial appeal of this approach is bypassing internal roadblocks, procurement teams will often ask about scanning costs, since this approach can often be 2-3x more costly than the alternatives.

2. In-place scanning (Preferred)

This method enables real-time data monitoring and analysis, which is crucial in environments where data sensitivity and immediacy are critical. Because data is analyzed within its native system, in-place scanning minimizes exposure to external threats and eliminates the latency and security risks associated with transferring data. Unlike the other two approaches, this model does not attempt to bypass vendor due diligence or internal risk assessments and audits.
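To make the "data never leaves its native system" property concrete, here is a minimal Python sketch under stated assumptions: a scanner runs next to the data, inspects records locally, and returns only metadata (store, column, category, match count), never the raw values. The regex detectors, the `Finding` record, and the `scan_in_place` function are hypothetical illustrations, not Normalyze's implementation or API; production classifiers are far more sophisticated than two regular expressions.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List, Mapping

# Hypothetical detectors for illustration only; real DSPM classification
# uses much richer techniques than simple regular expressions.
PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass(frozen=True)
class Finding:
    """Metadata-only result: no raw data values are stored here."""
    store: str
    column: str
    category: str
    match_count: int


def scan_in_place(store_name: str, rows: Iterable[Mapping[str, object]]) -> List[Finding]:
    """Scan rows where they live and return only aggregate findings."""
    counts = {}
    for row in rows:
        for column, value in row.items():
            if not isinstance(value, str):
                continue  # skip NULLs and non-text values in this sketch
            for category, pattern in PATTERNS.items():
                hits = len(pattern.findall(value))
                if hits:
                    key = (column, category)
                    counts[key] = counts.get(key, 0) + hits
    # Only (column, category, count) tuples leave the scanner.
    return [Finding(store_name, col, cat, n) for (col, cat), n in sorted(counts.items())]


if __name__ == "__main__":
    rows = [
        {"name": "Ada", "contact": "ada@example.com", "ssn": "123-45-6789"},
        {"name": "Bob", "contact": "bob@example.org", "ssn": None},
    ]
    for finding in scan_in_place("crm_db", rows):
        print(finding)
```

The design choice being illustrated is that the output describes where sensitive data is and how much of it exists, which is what a posture view needs, while the values themselves stay in the customer's environment.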

Advantages of in-place scanning

This method keeps security measures in step with the latest data, providing a continuously updated view of an organization’s data landscape and security posture.

- Lower operational cost
- Simplified data governance and sovereignty
- Continuous compliance
- Better trust among teams
- Smaller risk footprint
- Better data integrity

3. Sidecar model (Alternative)

In certain scenarios, Normalyze recommends a sidecar housed within the customer environment. This model is well suited to customers with many on-premises data stores: it balances operational isolation with close proximity to the data, offering a compromise between in-place scanning and external processing. With an established process for sidecar maintenance, managed either by the customer or under strict control by Normalyze, risk and compliance teams keep the data under their control while security and data teams still get rapid insight into their posture.

Resources

GigaOm Radar for DSPM 2024

Data is the most valuable asset for a modern enterprise, and its proliferation everywhere makes DSPM an essential tool for visibility into where valuable and sensitive data is, who has access to it, and how it’s being used.

The Normalyze cloud-native platform

Learn how we deliver the fastest scanning at scale with the most accurate classification across every data environment.

A Buyer’s Guide to Data Security Posture Management

The 2024 DSPM Buyer’s Guide is designed to help you with your research, clearly define your internal requirements, and make well-informed decisions for your organization.