This blog is the fourth in our series about a new study, Cloud Data Security by TechTarget’s Enterprise Strategy Group (ESG). If you’re just jumping into this series, feel free to go back to the first post, which introduces this study. To summarize, this initiative examined the challenges of securing cloud data among 387 IT, cybersecurity, and DevOps professionals who evaluate, purchase, test, deploy, and operate hybrid cloud data security technology products and services at organizations in North America. Normalyze is a co-sponsor of this study. If you’d like to read ESG’s eBook about the entire survey, download a PDF here.

This blog summarizes key points from the survey’s third major finding: organizations face numerous cloud data security challenges driven by scale, complexity, and visibility.

Top Data Security Challenges Intersect with Data Classification

Security operations professionals face many challenges in keeping cloud-resident sensitive data safe from loss or inappropriate access. According to ESG, respondents rated these five areas as the most difficult:

- Regulatory compliance

- Determining risk associated with sensitive data

- Data governance

- Detection of data exfiltration or misuse

- Prevention of data exfiltration or misuse

Scanning cloud data stores is a fundamental task underpinning all five of these areas. Scanning is the first step in discovering where sensitive data reside in cloud stores. Once sensitive data are identified, the scanning process analyzes them to classify what they contain and to determine which of them require appropriate data security policies.

More than two-thirds (70%) of respondents to the ESG survey agree that the scanning process should ideally read 100% of every file, object, database, or other cloud data store. A 100% read level eliminates questions about potentially missed sensitive data, but it can demand substantial processing and take much longer to execute than the sampling-based approach auditors typically use (which is acceptable for compliance purposes).
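To make the trade-off concrete, here is a toy sketch contrasting a full read with random sampling. It is not how Normalyze or any other product implements scanning: the function name `scan_records`, the SSN-style regex used as the only "classifier," and the in-memory list standing in for a cloud data store are all hypothetical simplifications.

```python
import random
import re

# Hypothetical detector: a single SSN-style pattern stands in for a real
# classification engine, which would use many detectors and context.
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def scan_records(records, sample_rate=1.0, seed=0):
    """Return (indices of matching records, number of records read).

    sample_rate=1.0 reads every record (a "100% read level");
    a lower rate reads only a random subset, which is cheaper but can miss data.
    """
    if not 0.0 < sample_rate <= 1.0:
        raise ValueError("sample_rate must be in (0, 1]")
    if sample_rate == 1.0:
        to_read = range(len(records))
    else:
        rng = random.Random(seed)  # seeded so runs are reproducible
        k = max(1, round(len(records) * sample_rate))
        to_read = sorted(rng.sample(range(len(records)), k))
    hits = [i for i in to_read if SSN_PATTERN.search(records[i])]
    return hits, len(to_read)


if __name__ == "__main__":
    # 1,000 records, only the last of which holds sensitive data.
    store = ["order shipped"] * 999 + ["customer ssn 123-45-6789"]
    print(scan_records(store))                   # full read: finds index 999
    print(scan_records(store, sample_rate=0.1))  # 10% sample: may miss it
```

The full read touches all 1,000 records and always finds the single sensitive one. The 10% sample reads only 100 records, a tenth of the work, but includes that record only about 10% of the time, which is exactly the kind of miss that a 100% read level rules out.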

Recognizing that an organization’s requirements for scanning may vary, the Normalyze cloud platform offers both 100% read level and sampling approaches for scanning.

Overconfidence in Discovery and Classification Efforts for Cloud-resident Data?

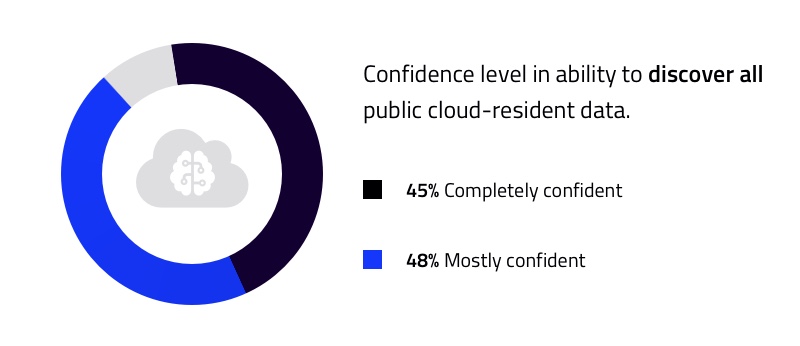

Survey respondents’ preference for 100% read-level scanning helps create a higher degree of confidence in the discovery and proper classification of sensitive data in cloud stores. The ESG survey found that 93% of respondents were mostly or completely confident in their ability to discover these data. Clearly, however, respondents did not discover as much as they thought: 84% of respondents also said they experienced multiple losses of sensitive data during the last 12 months!

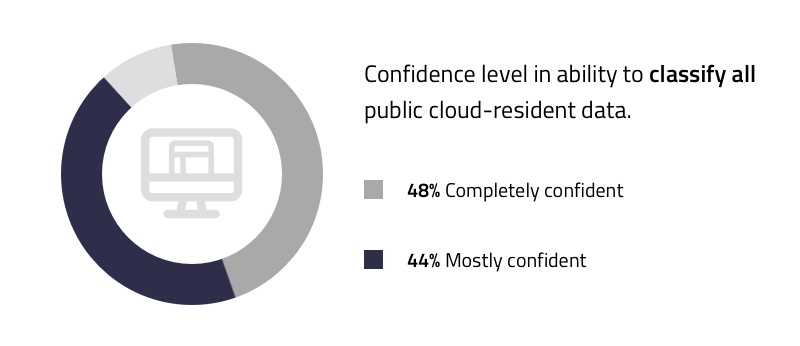

Confidence is also high for the classification of public cloud-resident data. An almost identical 92% of respondents said they are mostly or completely confident in this capability. Ironically, 33% said their organization incurred data loss due to misclassification of data in the last 12 months. Given such high confidence in classification capability, one would expect far fewer classification errors.

Based on these survey data, it’s safe to say respondents are overconfident in their organization’s ability to discover and classify sensitive cloud-resident data.

Our next blog in this series will take a deeper look at the ESG survey’s fourth major finding: organizations are applying cloud data security technologies and want integrated data platforms.